Pathom Updates 05, Speed check!

December 23, 2020

Hello everyone! Time for another round of updates! Before I start, I'd like to thank Cognitect and Nubank for sponsoring my work. I feel honored to be a participant in their initiative; if you haven't heard about it, check this post from Rich Hickey.

Datafy Smart Maps

To start this update: Smart Maps now support the datafy and nav protocols (`Datafiable` and `Navigable`)!

This means that if you use a tool like Reveal or REBL, you can lazily navigate through everything a Smart Map can resolve!

Here you can see a demo exploring data in Reveal:

Now in REBL:
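The Smart Map implementation itself is not reproduced here. As a toy sketch of the mechanism these tools rely on: since Clojure 1.10, the `Datafiable` and `Navigable` protocols in `clojure.core.protocols` can be implemented through metadata, so `clojure.datafy/datafy` can show placeholders for values that were never computed, and `clojure.datafy/nav` computes a value only when a tool drills into it. The `derivations` map and the `::not-loaded` marker are hypothetical, not Pathom names.

```clojure
(ns example.lazy-nav
  (:require [clojure.core.protocols :as p]
            [clojure.datafy :as d]))

;; Hypothetical derived attributes, each computed from the entity on demand.
(def derivations
  {:full-name   (fn [{:keys [first-name last-name]}] (str first-name " " last-name))
   :name-length (fn [{:keys [first-name last-name]}]
                  (+ (count first-name) 1 (count last-name)))})

(defn lazy-entity
  "Toy lazy entity: datafy lists every derivable attribute with a
  ::not-loaded placeholder; nav computes a derived value only when asked."
  [entity]
  (with-meta entity
    {`p/datafy
     (fn [e]
       ;; the datafied map carries its own nav implementation in metadata
       (with-meta
         (merge (zipmap (keys derivations) (repeat ::not-loaded)) e)
         {`p/nav
          (fn [_coll k v]
            (if (= v ::not-loaded)
              ((get derivations k) e) ; compute on navigation
              v))}))}))               ; already-known values pass through

;; Usage, roughly what Reveal or REBL do when you click on a key:
(def data (d/datafy (lazy-entity {:first-name "Ada" :last-name "Lovelace"})))
(:full-name data)                         ; => :example.lazy-nav/not-loaded
(d/nav data :full-name (:full-name data)) ; => "Ada Lovelace"
```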

Benchmarks

Back in September, when I announced Pathom 3, I showed a few benchmarks to demonstrate the rewrite's potential. A few months later, the runner has gained Placeholders, Unions, Parameters, Resolver Cache, Error handling, Batching, Execution analysis, and Mutations.

With all these new features, I think it’s an excellent time to take new measurements.

For the benchmarks I’ll use the following resolvers:

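```clojure
;; Namespace name is illustrative; the aliases match the ones used below.
(ns pathom3.benchmarks
  (:require [com.wsscode.pathom3.connect.built-in.resolvers :as pbir]
            [com.wsscode.pathom3.connect.indexes :as pci]
            [com.wsscode.pathom3.connect.operation :as pco]))

;; Resolver transform: when the query passes a :delay param, sleep that
;; many milliseconds before running the wrapped resolver.
(defn slow-param-transform [config]
  (update config ::pco/resolve
    (fn [resolve]
      (fn [env input]
        (when-let [delay (-> (pco/params env) :delay)]
          ;; The original used a `time/sleep-ms` helper (namespace not shown);
          ;; Thread/sleep is a JVM-only stand-in.
          (Thread/sleep (long delay)))
        (resolve env input)))))

;; Resolver returning `count` items, each with a fresh :id taken from the
;; counter stored in the env under :n.
(defn items-resolver [count]
  (let [kw (keyword (str "items-" count))]
    (pco/resolver (symbol (str "items-" count))
      {::pco/output [{kw [:id]}]
       ::pco/cache? false}
      (fn [{:keys [n]} _]
        {kw (mapv (fn [_] (hash-map :id (vswap! n inc))) (range count))}))))

;; For `attr`, builds a plain resolver and a batch resolver (`<attr>-batch`),
;; both computing (* 10 id) and both honoring the :delay param.
(defn gen-resolvers [attr]
  (let [attr-s (name attr)]
    [(pco/resolver (symbol attr-s)
       {::pco/input     [:id]
        ::pco/output    [attr]
        ::pco/transform slow-param-transform}
       (fn [_ {:keys [id]}] {attr (* 10 id)}))
     (let [batch-attr (keyword (str attr-s "-batch"))]
       (pco/resolver (symbol (str attr-s "-batch"))
         {::pco/input     [:id]
          ::pco/output    [batch-attr]
          ::pco/batch?    true
          ::pco/transform slow-param-transform}
         ;; a batch resolver receives all inputs and returns outputs in the same order
         (fn [_ ids]
           (mapv #(hash-map batch-attr (* 10 (:id %))) ids))))]))

(def env-indexes
  (pci/register
    [(items-resolver 1)
     (items-resolver 10)
     (items-resolver 100)
     (items-resolver 1000)
     (items-resolver 10000)
     (gen-resolvers :x)
     (gen-resolvers :y)
     ; complex route
     (pbir/constantly-resolver :a 1)
     (pbir/single-attr-resolver :g :b inc)
     (pbir/constantly-resolver :c 2)
     (pbir/constantly-resolver :e 1)
     (update (pbir/constantly-resolver :e 3) :config assoc ::pco/op-name 'e1)
     (pbir/single-attr-resolver :e :f inc)
     (update (pbir/constantly-resolver :g 4) :config assoc ::pco/input [:c :f])
     (update (pbir/constantly-resolver :h 5) :config assoc ::pco/input [:a :b])]))

;; Fresh env per run: the indexes plus a new id counter for items-resolver.
(defn base-env []
  (assoc env-indexes :n (volatile! 0)))
```

Single read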

As I did last time, the first benchmark measures the minimal work needed to get a result from Pathom: a query with a single attribute, which requires a single resolver call to realize.

```clojure
[:x]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 0.007ms | 1.000x |
| Pathom 3 | 0.035ms | 4.810x |
| Pathom 2 Serial | 0.058ms | 7.878x |
| Pathom 2 Async | 0.102ms | 13.833x |
| Pathom 2 Parallel | 0.144ms | 19.509x |
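The last column of these tables is each runner's mean divided by the fastest runner's mean, so the fastest row reads 1.000x. A minimal sketch of that calculation (the function name is hypothetical). Recomputing from the rounded means shown can differ a bit from the published ratio (0.035 / 0.007 = 5.0 versus 4.810x), which suggests the ratios were computed from unrounded timings.

```clojure
(defn relative-times
  "Divides each runner's mean by the fastest mean; the fastest runner gets 1.0."
  [means]
  (let [fastest (apply min (vals means))]
    (into {} (map (fn [[runner mean]] [runner (/ mean fastest)])) means)))

;; Usage with two rows of the "Sequence of 1000 items" table:
(relative-times {"Pathom 3" 11.823 "Pathom 2 Serial" 108.639})
```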

Before moving on, let's understand what each row represents, from top to bottom:

- Pathom 3 Cached Plan (`p3-serial-cp`): Pathom 3 serial parser, using a persistent plan cache. This means the planning part of the operation is already pre-cached. It's common for APIs to make the same queries over and over, which provides the opportunity to cache this step, and you can expect a high hit rate on this plan cache. Plans are keyed on the query and the available data.
- Pathom 3 (`p3-serial`): Pathom 3 serial, without any pre-cache. Keep in mind that even without a pre-cache, Pathom still keeps a request-level plan cache: when the process goes through a collection, the plan is cached and reused across the collection items.
- Pathom 2 Serial (`p2-serial`): Pathom 2 using the serial parser and `reader2`.
- Pathom 2 Async (`p2-async`): Pathom 2 using the async parser and `async-reader2`.
- Pathom 2 Parallel (`p2-parallel`): Pathom 2 using the parallel parser and `parallel-reader`.
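The plan cache described above is, in spirit, memoization keyed on the available data and the query. A minimal sketch of that idea in plain Clojure, not Pathom's code: `plan-fn` stands in for a hypothetical planner, and the same wrapper created once per request would model the request-level cache reused across collection items.

```clojure
(defn make-cached-planner
  "Wraps a (hypothetical) `plan-fn` of [available-keys query] so each
  distinct [available-keys query] pair is planned once and then reused."
  [plan-fn]
  (let [cache (atom {})]
    (fn [available-keys query]
      (let [k [available-keys query]]
        (if-let [hit (find @cache k)]
          (val hit)                              ; cache hit: skip planning
          (let [plan (plan-fn available-keys query)]
            (swap! cache assoc k plan)           ; cache miss: plan and store
            plan))))))
```

Since API clients tend to repeat the same queries, most calls after the first one become a map lookup instead of a planning run.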

Complex read

This example demonstrates a case where a single attribute requires a complex chain of resolvers to get fulfilled.

```clojure
[:h]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 0.042ms | 1.000x |
| Pathom 3 | 0.213ms | 5.105x |
| Pathom 2 Serial | 0.535ms | 12.848x |
| Pathom 2 Async | 1.056ms | 25.370x |
| Pathom 2 Parallel | 1.636ms | 39.285x |

In this example, the plan cache is even more effective (5.1x versus 4.8x in the single read), which makes sense: this query requires generating many plan nodes, while the previous one was a plan with a single node.

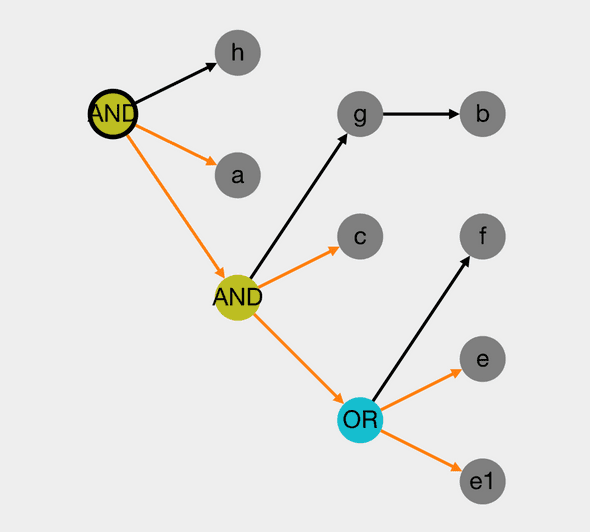

Here is what the process of :h looks like in this example:

Tip: if you are not familiar with how Pathom 3 planning works and would like to know more about node generation, check this documentation page.
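To see why :h needs so many nodes, here is a toy dependency walk over the "complex route" resolvers from the setup code. It is a depth-first topological sort, not Pathom 3's planner (which builds a graph of plan nodes); it models one resolver per attribute and ignores the second :e resolver.

```clojure
;; Output attribute -> inputs of the resolver that produces it,
;; mirroring the "complex route" resolvers in the benchmark setup.
(def deps
  {:a []
   :c []
   :e []
   :f [:e]
   :g [:c :f]
   :b [:g]
   :h [:a :b]})

(defn resolution-order
  "Returns every attribute needed to produce `attr`, each appearing after
  its inputs (depth-first topological sort). Assumes no cycles."
  [attr]
  (letfn [(visit [order a]
            (if (some #{a} order)
              order                                   ; already scheduled
              (conj (reduce visit order (get deps a)) a)))]
    (visit [] attr)))

(resolution-order :h) ; => [:a :c :e :f :g :b :h]
```

One requested attribute expands to seven resolver steps, compared with a single step for `[:x]`, so there is much more planning work for the cache to save.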

Sequence of 1000 items

In this example, we will look at how long it takes for Pathom to process a collection with 1000 items, making a simple resolver call on each.

```clojure
[{:items-1000 [:x]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 12.584ms | 1.064x |
| Pathom 3 | 11.823ms | 1.000x |
| Pathom 2 Serial | 108.639ms | 9.189x |
| Pathom 2 Async | 226.384ms | 19.147x |
| Pathom 2 Parallel | 81.870ms | 6.924x |

Here we can see that Pathom 3 is roughly 8.6 to 9.2 times faster at raw sequence processing when compared to Pathom 2 Serial.

Sequence of 1000 items in batch

Same as the previous benchmark, but now with batched value processing.

```clojure
[{:items-1000 [:x-batch]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 16.172ms | 1.061x |
| Pathom 3 | 15.241ms | 1.000x |
| Pathom 2 Serial | 113.813ms | 7.468x |
| Pathom 2 Async | 223.306ms | 14.652x |
| Pathom 2 Parallel | 57.038ms | 3.742x |

Batching shows similar gains: Pathom 3 is about 7.5 times faster than Pathom 2 Serial.
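The plain and batch resolvers from `gen-resolvers` differ in call shape. This toy counter (plain Clojure, no Pathom; `x-one` and `x-many` are hypothetical names) shows why that matters: the same 1000 results take 1000 calls in per-item form and a single call in batch form, so any fixed per-call cost, such as I/O latency, is paid once instead of per item.

```clojure
;; Counts how many times a resolver function is invoked.
(def calls (atom 0))

(defn x-one
  "Per-item shape: one call per input map."
  [{:keys [id]}]
  (swap! calls inc)
  {:x-batch (* 10 id)})

(defn x-many
  "Batch shape: one call receives every input and returns the outputs
  in the same order."
  [inputs]
  (swap! calls inc)
  (mapv (fn [{:keys [id]}] {:x-batch (* 10 id)}) inputs))

(def items (mapv (fn [i] {:id i}) (range 1 1001)))

(reset! calls 0)
(def per-item-results (mapv x-one items)) ; @calls is now 1000
(reset! calls 0)
(def batch-results (x-many items))        ; @calls is now 1
(= per-item-results batch-results)        ; => true
```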

Nesting 10 > 10 > 10

Testing nesting, 3 levels down, 10 items per level.

```clojure
[{:items-10
  [:x
   {:items-10
    [:y
     {:items-10
      [:x]}]}]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 17.720ms | 1.027x |
| Pathom 3 | 17.258ms | 1.000x |
| Pathom 2 Serial | 129.452ms | 7.501x |
| Pathom 2 Async | 282.742ms | 16.383x |
| Pathom 2 Parallel | 106.562ms | 6.175x |

Nesting 100 > 10, batch head

Nesting with batching, the batch occurring at the top.

```clojure
[{:items-100
  [:x-batch
   {:items-10
    [:y]}]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 13.949ms | 1.000x |
| Pathom 3 | 14.493ms | 1.039x |
| Pathom 2 Serial | 126.786ms | 9.090x |
| Pathom 2 Async | 260.164ms | 18.652x |
| Pathom 2 Parallel | 112.960ms | 8.098x |

Nesting 100 > 10, batch tail

Nesting with batching, the batch occurring at the tail (innermost) items.

```clojure
[{:items-100
  [:y
   {:items-10
    [:x-batch]}]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 17.702ms | 1.000x |
| Pathom 3 | 18.023ms | 1.018x |
| Pathom 2 Serial | 133.504ms | 7.542x |
| Pathom 2 Async | 268.601ms | 15.174x |
| Pathom 2 Parallel | 53.008ms | 2.995x |

Sequence of 100 items, batch with 200ms delay

```clojure
[{:items-100
  [(:x-batch {:delay 200})]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 205.625ms | 1.000x |
| Pathom 3 | 205.644ms | 1.000x |
| Pathom 2 Serial | 220.460ms | 1.072x |
| Pathom 2 Async | 237.340ms | 1.154x |
| Pathom 2 Parallel | 212.722ms | 1.035x |

This is a good example of how, when some resolvers are costly, the library's overhead becomes much less noticeable: every runner lands close to the 200ms spent in the resolver itself.
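A small worked calculation makes this concrete. Assuming each runner's time is the 200ms sleep (paid once, since the resolver is batched) plus the runner's own overhead, subtracting the delay from the means in the table gives the overhead in milliseconds and as a share of the total. The absolute gap between runners remains, but it shrinks to a small fraction of the request.

```clojure
;; Assumption: the batched resolver sleeps 200ms exactly once per run.
(def delay-ms 200)

;; Means from the table above, in milliseconds.
(def means
  {"Pathom 3 Cached Plan" 205.625
   "Pathom 3"             205.644
   "Pathom 2 Serial"      220.460
   "Pathom 2 Async"       237.340
   "Pathom 2 Parallel"    212.722})

(defn overhead
  "Time beyond the resolver delay, in ms and as a share of the total time."
  [mean]
  (let [extra (- mean delay-ms)]
    {:extra-ms extra :share (/ extra mean)}))

;; Pathom 2 Serial: about 20.5ms extra, roughly 9% of the request.
;; Pathom 3: about 5.6ms extra, under 3% of the request.
(overhead (get means "Pathom 2 Serial"))
(overhead (get means "Pathom 3"))
```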

Nesting 10 > 10, batch with 20ms delay

```clojure
[{:items-10
  [{:items-10
    [(:x-batch {:delay 20})]}]}]
```

| Runner | Mean | Relative to fastest |
|---|---|---|
| Pathom 3 Cached Plan | 25.972ms | 1.009x |
| Pathom 3 | 25.729ms | 1.000x |
| Pathom 2 Serial | 258.815ms | 10.059x |
| Pathom 2 Async | 281.882ms | 10.956x |
| Pathom 2 Parallel | 77.157ms | 2.999x |

Continuous benchmarking

This time I built these benchmarks so they are easy to keep running over time (they may go into a pipeline soon). I hope this helps us avoid performance surprises from the changes to come. It's also a platform for trying out features and seeing whether they impact Pathom's speed. This is just the beginning of Pathom benchmarking!
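For a sense of what a repeatable measurement involves, here is a minimal timing harness sketch. It is not the harness used for the numbers above; it only warms up, times a batch of runs, and reports the mean. A dedicated library such as Criterium handles JIT warm-up and statistics more carefully.

```clojure
(defn mean-ms
  "Runs `f` `warmup` times untimed, then `runs` times timed, and returns the
  mean wall-clock milliseconds per run. Naive: no outlier handling."
  [f {:keys [warmup runs] :or {warmup 10 runs 100}}]
  (dotimes [_ warmup] (f))
  (let [start (System/nanoTime)]
    (dotimes [_ runs] (f))
    (/ (- (System/nanoTime) start) 1e6 runs)))

;; Example, assuming the resolver setup above and Pathom 3's EQL interface
;; (require '[com.wsscode.pathom3.interface.eql :as p.eql]):
;; (mean-ms #(p.eql/process (base-env) [:x]) {:runs 1000})
```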

Plugins & Error handling

I was a bit concerned about adding plugins before having these benchmarks. Now that I can test how the plugin architecture affects performance, I feel more comfortable starting on it, and it is the next thing coming to Pathom 3!

Error handling is related to plugins too. I've been quietly working on it for some time, and I see that to complete the error-handling story, I need plugins first. I'll be writing more about those soon.

That’s what I have for today. Happy holidays everyone!

Follow closer

If you'd like to know more details about my projects, check my open Roam database, where you can follow development details almost daily.

Support my work

I'm currently an independent developer, and I spend quite a lot of my personal time doing open-source work. If my work is valuable for you or your company, please consider supporting it through Patreon; this way you can help me have more time available to keep doing this work. Thanks!

Current supporters

And here I'd like to thank my current supporters: