Pathom Updates 12

March 12, 2022

Hello everyone! It’s been a while since we had a Pathom Update post, so let’s catch up on what’s been happening since the last one!

Pathom Viz

Pathom Viz got some love! Let’s see the updates.

Proportional resize panels

A long-standing issue for me was the resize handles all around the app. In the past, they were based on absolute pixel sizes, which is terrible when we resize the app because some panels might collapse completely.

That has now changed: all sizes are based on percentages, so as you resize the full window, the panels keep their proportional sizes.

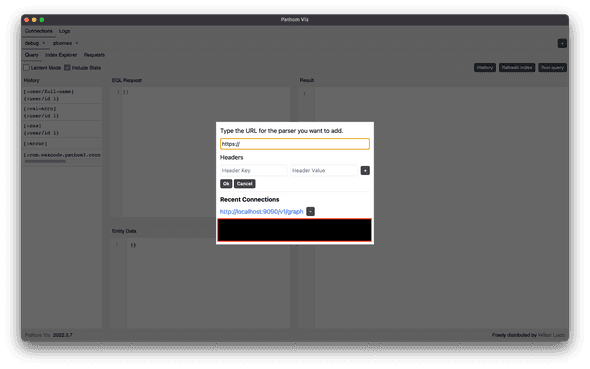

Improvements to connect via URL

Now when connecting via URL you can send custom headers. This makes it possible to pass an API token to authenticate your calls.

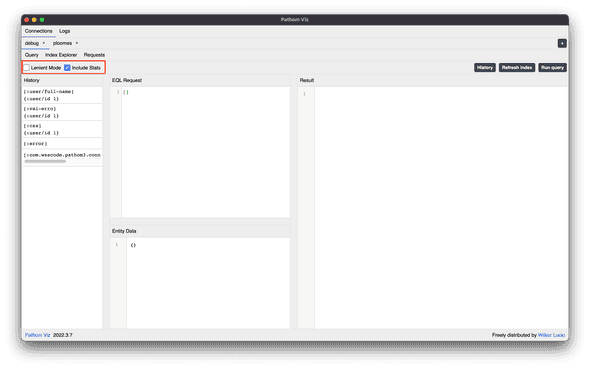

Pathom 3 query settings

I have added two new checkboxes when connecting to a Pathom 3 client. You can toggle whether lenient mode is active and whether to include the trace data.

Pathom 3 entity data on query history

A new feature for Pathom 3: the query history now shows the entity data used in each request.

Record Entity data on query history

The query history now remembers both the query and the entity data used, so you can come back to a past query in a click.

Parallel Processor

The parallel processor is here!

In Pathom 3, the parallel processor leverages the planner and has an implementation that’s much simpler than its Pathom 2 counterpart, resulting in an algorithm that requires fewer resources.

The parallel processor is an extension of the async processor. You can find details on how to use the parallel processor at https://pathom3.wsscode.com/docs/async/#parallel-process.
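To make that concrete, here is a minimal sketch of enabling the parallel processor on top of the async interface. The two resolvers and the `:example/*` attributes are made up for illustration, and the sketch assumes the `::p.a.eql/parallel? true` environment flag described on the docs page linked above, which remains the authoritative reference.

```clojure
(ns example.parallel
  (:require [com.wsscode.pathom3.connect.indexes :as pci]
            [com.wsscode.pathom3.connect.operation :as pco]
            [com.wsscode.pathom3.interface.async.eql :as p.a.eql]
            [promesa.core :as p]))

;; Two hypothetical resolvers that do not depend on each other.
;; Each returns a promise that resolves after a simulated 100ms delay.
(pco/defresolver slow-a []
  {::pco/output [:example/a]}
  (p/delay 100 {:example/a 1}))

(pco/defresolver slow-b []
  {::pco/output [:example/b]}
  (p/delay 100 {:example/b 2}))

;; Assumption: this env flag switches the async runner to the parallel one.
(def env
  (-> {::p.a.eql/parallel? true}
      (pci/register [slow-a slow-b])))

(comment
  ;; The async interface returns a promise; deref it on the JVM.
  @(p.a.eql/process env [:example/a :example/b]))
```

Since the two attributes are independent, the parallel processor can wait on both promises at once instead of one after the other, which is where the gains come from when resolvers do real I/O.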

Benchmarks

It’s been a while since we last had some performance comparisons. Last time, Pathom 3 was in its very early stages, and lacking features makes it easier to go faster. Now that Pathom 3 is close to feature complete, how is it performing? Let’s find out!

note

I ran all the benchmarks on the JVM on a 2019 MacBook Pro, 2.4 GHz 8-Core Intel Core i9, 64GB memory.

I used the criterium library for the measurements.

Single read

As I did last time, the first benchmark measures the time to do the minimal work needed to get a result out of Pathom. It’s a query with a single attribute, which requires a single resolver call to realize.

    [:x]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 0.016ms | 1.000x |
| Pathom 3 Serial | 0.018ms | 1.134x |
| Pathom 3 Async Cached Plan | 0.069ms | 4.456x |
| Pathom 3 Async | 0.062ms | 3.977x |
| Pathom 3 Parallel Cached Plan | 0.040ms | 2.543x |
| Pathom 3 Parallel | 0.045ms | 2.866x |
| Pathom 2 Serial | 0.040ms | 2.564x |
| Pathom 2 Async | 0.082ms | 5.284x |
| Pathom 2 Parallel | 0.125ms | 8.027x |

Before moving on, let’s understand what the bars represent, from left to right:

- p3-serial-cp: Pathom 3 serial parser, using a persistent plan cache. This means that the planning part of the operation is already pre-cached. It’s common for APIs to make the same queries over and over, this provides the opportunity to cache this step, and you may expect a high cache hit rate on this plan cache. They are based on the query and the available data.
- p3-serial: Pathom 3 serial, without any pre-cache. Remember that even if there is no pre-cache, Pathom will make a request level plan cache anyway. When there is a collection in the process, the plan is cached and re-utilized between the collection items.
- p3-async-cp: Pathom 3 using async processor with cached plan
- p3-async: Pathom 3 using async processor
- p3-parallel-cp: Pathom 3 using parallel processor with cached plan
- p3-parallel: Pathom 3 using parallel processor
- p2-serial: Pathom 2 using the serial parser and reader2.
- p2-async: Pathom 2 using the async parser and async-reader2.
- p2-parallel: Pathom 2 using the parallel parser and parallel-reader.
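As a rough illustration of the difference between the plain and the cached-plan setups, here is a minimal sketch of a serial environment with and without a persistent plan cache. It is not the benchmark code (that lives in the gist linked at the end of the benchmarks). The names `x`, `plan-cache*`, `env` and `env-cached` are chosen for this sketch, which assumes the `with-plan-cache` helper from the Pathom 3 planner namespace.

```clojure
(ns example.plan-cache
  (:require [com.wsscode.pathom3.connect.indexes :as pci]
            [com.wsscode.pathom3.connect.operation :as pco]
            [com.wsscode.pathom3.connect.planner :as pcp]
            [com.wsscode.pathom3.interface.eql :as p.eql]))

;; A constant resolver: the output attribute is inferred from the map body.
(pco/defresolver x [] {:x 1})

;; Keeping the cache in a long-lived atom lets plans survive across requests.
(defonce plan-cache* (atom {}))

;; Without a persistent cache, plans are only reused within one request.
(def env
  (pci/register [x]))

;; Same indexes, plus the persistent plan cache.
(def env-cached
  (-> (pci/register [x])
      (pcp/with-plan-cache plan-cache*)))

;; Both environments answer the query the same way; only planning cost differs.
(p.eql/process env [:x])
(p.eql/process env-cached [:x])
```

With `env-cached`, repeating the same query against the same available data should skip the planning step after the first run, which is the situation the "Cached Plan" rows measure.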

Complex read

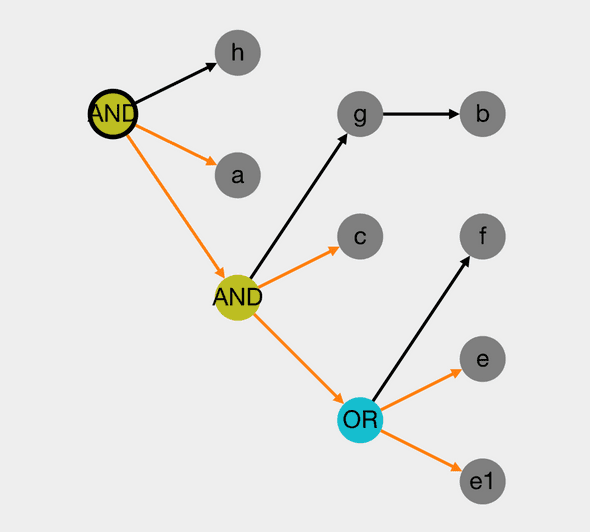

This example demonstrates a case where a single attribute requires a complex chain of resolvers to get fulfilled.

    [:h]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 0.023ms | 1.000x |
| Pathom 3 Serial | 0.041ms | 1.756x |
| Pathom 3 Async Cached Plan | 0.054ms | 2.322x |
| Pathom 3 Async | 0.079ms | 3.383x |
| Pathom 3 Parallel Cached Plan | 0.037ms | 1.592x |
| Pathom 3 Parallel | 0.072ms | 3.094x |
| Pathom 2 Serial | 0.593ms | 25.537x |
| Pathom 2 Async | 1.122ms | 48.301x |
| Pathom 2 Parallel | 1.331ms | 57.302x |

In this example, we can see the cached plan was even more effective, which makes sense given that this query requires generating many nodes, while the previous one had a plan with a single node.

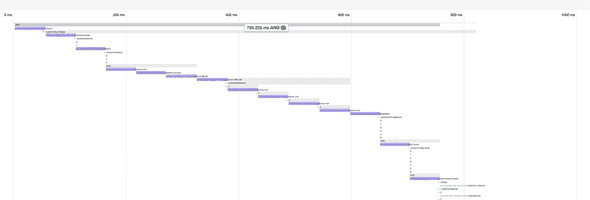

Here is what the process of :h looks like in this example:

tip

If you are not familiar with how Pathom 3 planning works and would like to know more about node generation, check this documentation page.

Sequence of 1000 items

In this example, we will look at how long it takes for Pathom to process a collection with 1000 items in it, a simple resolver call on each.

    [{:items-1000 [:x]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 26.191ms | 1.000x |
| Pathom 3 Serial | 27.098ms | 1.035x |
| Pathom 3 Async Cached Plan | 44.104ms | 1.684x |
| Pathom 3 Async | 46.486ms | 1.775x |
| Pathom 3 Parallel Cached Plan | 36.635ms | 1.399x |
| Pathom 3 Parallel | 36.540ms | 1.395x |
| Pathom 2 Serial | 104.952ms | 4.007x |
| Pathom 2 Async | 221.077ms | 8.441x |
| Pathom 2 Parallel | 71.848ms | 2.743x |

Here we can see that Pathom 3 serial is about four times faster than Pathom 2 serial for raw sequence processing.
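The "about four times" figure is simply the ratio between two means from the table above. Here is a minimal sketch in plain Clojure that recomputes it. The helper names `round3` and `relative-to-fastest` are made up for this sketch, and it assumes the Variance column is each mean divided by the fastest mean, which is consistent with the numbers shown.

```clojure
;; Mean times (ms) copied from the 1000-item table above.
(def means-ms
  {"Pathom 3 Serial Cached Plan" 26.191
   "Pathom 3 Serial"             27.098
   "Pathom 2 Serial"             104.952
   "Pathom 2 Parallel"           71.848})

(defn round3
  "Rounds x to three decimal places."
  [x]
  (/ (Math/round (* x 1000.0)) 1000.0))

(defn relative-to-fastest
  "Maps each runner to its mean divided by the smallest mean."
  [means]
  (let [fastest (apply min (vals means))]
    (into {}
          (map (fn [[runner mean]] [runner (round3 (/ mean fastest))]))
          means)))

(relative-to-fastest means-ms)
;; => {"Pathom 3 Serial Cached Plan" 1.0
;;     "Pathom 3 Serial" 1.035
;;     "Pathom 2 Serial" 4.007
;;     "Pathom 2 Parallel" 2.743}
```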

Sequence of 1000 items in batch

Same as the previous, but now with batched value processing.

    [{:items-1000 [:x-batch]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 30.523ms | 1.024x |
| Pathom 3 Serial | 29.815ms | 1.000x |
| Pathom 3 Async Cached Plan | 46.741ms | 1.568x |
| Pathom 3 Async | 47.478ms | 1.592x |
| Pathom 3 Parallel Cached Plan | 54.079ms | 1.814x |
| Pathom 3 Parallel | 53.074ms | 1.780x |
| Pathom 2 Serial | 102.560ms | 3.440x |
| Pathom 2 Async | 221.441ms | 7.427x |
| Pathom 2 Parallel | 60.993ms | 2.046x |

We see similar gains with batching.
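For readers who have not used batching in Pathom 3, here is a minimal sketch of what a batch resolver over a collection can look like. It is not the benchmark code. The `:items-3` collection, the `:id` input and the returned values are invented for this sketch, which assumes the `::pco/batch? true` resolver option, where the resolver receives a sequence of inputs and returns one output map per input, in the same order.

```clojure
(ns example.batch
  (:require [com.wsscode.pathom3.connect.indexes :as pci]
            [com.wsscode.pathom3.connect.operation :as pco]
            [com.wsscode.pathom3.interface.eql :as p.eql]))

;; Hypothetical collection resolver: three items, each with an :id.
(pco/defresolver items-3 []
  {::pco/output [{:items-3 [:id]}]}
  {:items-3 (mapv (fn [id] {:id id}) (range 3))})

;; Batch resolver: called once with all pending inputs instead of once per item.
(pco/defresolver x-batch [items]
  {::pco/input  [:id]
   ::pco/output [:x-batch]
   ::pco/batch? true}
  (mapv (fn [{:keys [id]}] {:x-batch (* 10 id)}) items))

(def env
  (pci/register [items-3 x-batch]))

;; Same query shape as the benchmark, with a much smaller collection.
(p.eql/process env [{:items-3 [:x-batch]}])
```

The point of batching is to turn N resolver calls into one, which matters most when each call has a fixed cost such as a network round trip.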

Nesting 10 > 10 > 10

Testing nesting, 3 levels down, 10 items per level.

    [{:items-10
      [:x
       {:items-10
        [:y
         {:items-10
          [:x]}]}]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 26.138ms | 1.000x |
| Pathom 3 Serial | 26.573ms | 1.017x |
| Pathom 3 Async Cached Plan | 53.316ms | 2.040x |
| Pathom 3 Async | 53.056ms | 2.030x |
| Pathom 3 Parallel Cached Plan | 41.959ms | 1.605x |
| Pathom 3 Parallel | 42.299ms | 1.618x |
| Pathom 2 Serial | 75.318ms | 2.882x |
| Pathom 2 Async | 166.487ms | 6.370x |
| Pathom 2 Parallel | 78.092ms | 2.988x |

Nesting 100 > 10, batch head

Nesting with batching, the batch occurring at the top.

    [{:items-100
      [:x-batch
       {:items-10
        [:y]}]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 26.738ms | 1.000x |
| Pathom 3 Serial | 27.626ms | 1.033x |
| Pathom 3 Async Cached Plan | 52.391ms | 1.959x |
| Pathom 3 Async | 54.656ms | 2.044x |
| Pathom 3 Parallel Cached Plan | 51.150ms | 1.913x |
| Pathom 3 Parallel | 53.442ms | 1.999x |
| Pathom 2 Serial | 78.397ms | 2.932x |
| Pathom 2 Async | 173.438ms | 6.487x |
| Pathom 2 Parallel | 68.285ms | 2.554x |

Nesting 100 > 10, batch tail

Nesting with batching, the batch occurring at tail items.

    [{:items-100
      [:y
       {:items-10
        [:x-batch]}]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 38.248ms | 1.039x |
| Pathom 3 Serial | 36.820ms | 1.000x |
| Pathom 3 Async Cached Plan | 77.091ms | 2.094x |
| Pathom 3 Async | 77.893ms | 2.116x |
| Pathom 3 Parallel Cached Plan | 75.088ms | 2.039x |
| Pathom 3 Parallel | 67.457ms | 1.832x |
| Pathom 2 Serial | 93.422ms | 2.537x |
| Pathom 2 Async | 225.680ms | 6.129x |
| Pathom 2 Parallel | 77.276ms | 2.099x |

Sequence of 100 items, batch with 200ms delay

    [{:items-100
      [(:x-batch {:delay 200})]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 210.946ms | 1.000x |
| Pathom 3 Serial | 212.334ms | 1.007x |
| Pathom 3 Async Cached Plan | 214.686ms | 1.018x |
| Pathom 3 Async | 216.780ms | 1.028x |
| Pathom 3 Parallel Cached Plan | 221.750ms | 1.051x |
| Pathom 3 Parallel | 218.851ms | 1.037x |
| Pathom 2 Serial | 224.137ms | 1.063x |
| Pathom 2 Async | 240.222ms | 1.139x |
| Pathom 2 Parallel | 217.378ms | 1.030x |

This is a good example of how, when some resolvers are costly, the processing overhead becomes much less noticeable: with a 200ms resolver, every runner lands within about 14% of the fastest one.

Nesting 10 > 10, batch with 20ms delay

    [{:items-10
      [{:items-10
        [(:x-batch {:delay 20})]}]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 33.437ms | 1.003x |
| Pathom 3 Serial | 33.327ms | 1.000x |
| Pathom 3 Async Cached Plan | 35.565ms | 1.067x |
| Pathom 3 Async | 35.890ms | 1.077x |
| Pathom 3 Parallel Cached Plan | 41.128ms | 1.234x |
| Pathom 3 Parallel | 40.723ms | 1.222x |
| Pathom 2 Serial | 40.241ms | 1.207x |
| Pathom 2 Async | 55.795ms | 1.674x |
| Pathom 2 Parallel | 40.733ms | 1.222x |

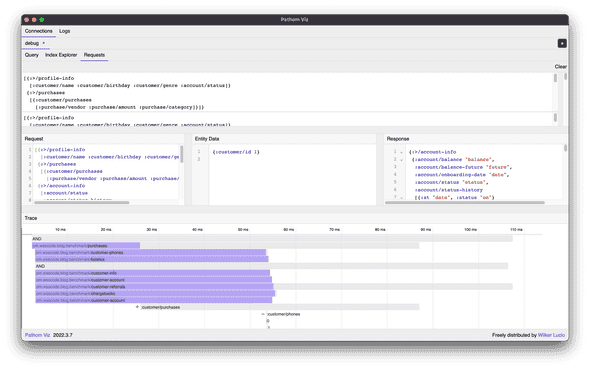

Complex read structure

This example is closer to what a complex user request looks like, where parts are independent and the parallel features can give a real speed boost:

    [{:>/profile-info
      [:customer/name
       :customer/birthday
       :customer/genre
       :account/status]}
     {:>/purchases
      [{:customer/purchases
        [:purchase/vendor
         :purchase/amount
         :purchase/category]}]}
     {:>/account-info
      [:account/status
       :account/status-history
       :account/onboarding-date
       :account/balance
       :account/balance-future]}
     {:>/chargebacks
      [{:customer/chargebacks
        [:chargeback/date
         :chargeback/status]}]}
     {:>/credit-cards
      [{:account/credit-cards
        [:credit-card/masked-number
         :credit-card/status]}]}
     {:>/boletos
      [{:customer/boletos
        [:boleto/number
         :boleto/paid?]}]}
     {:>/mgm
      [{:customer/referrals
        [:customer/name]}]}
     {:>/phones
      [{:customer/phones
        [:phone/id
         :phone/number]}]}]

| Runner | Mean | Variance |
|---|---|---|
| Pathom 3 Serial Cached Plan | 819.869ms | 6.774x |
| Pathom 3 Serial | 821.332ms | 6.786x |
| Pathom 3 Async Cached Plan | 789.007ms | 6.519x |
| Pathom 3 Async | 791.661ms | 6.541x |
| Pathom 3 Parallel Cached Plan | 121.029ms | 1.000x |
| Pathom 3 Parallel | 125.183ms | 1.034x |
| Pathom 2 Serial | 807.025ms | 6.668x |
| Pathom 2 Async | 820.699ms | 6.781x |
| Pathom 2 Parallel | 164.130ms | 1.356x |

As we can see, the parallel processors shine here, with Pathom 3 being the fastest. It’s also worth noting that the Pathom 3 implementation uses significantly fewer resources than Pathom 2, thanks to the new parallel implementation.

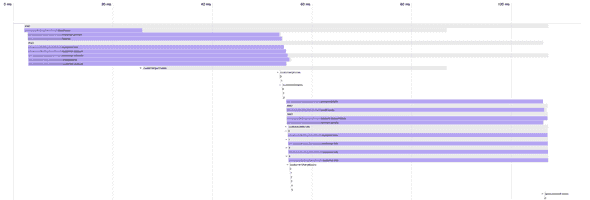

I think it’s interesting to see the trace of the parallel process vs the serial in this case:

Here you can find the full sources for these benchmarks: https://gist.github.com/wilkerlucio/f2a52d032101a5c436045e621e331b4c

Pathom 3 GraphQL

Pathom 3 GraphQL got an official release!

If you want to integrate some GraphQL source into your Pathom system, check it out at https://github.com/wilkerlucio/pathom3-graphql.

Follow closer

If you’d like to follow my projects in more detail, check my open Roam database, where you can see development details almost daily.

Support my work

I'm currently an independent developer and I spend quite a lot of my personal time doing open-source work. If my work is valuable to you or your company, please consider supporting it through Patreon. This way you can help me have more time available to keep doing this work. Thanks!

Current supporters

And here I’d like to thank my current supporters: